Predicting demand and allocating resources better in a virtual network (2018)

Bitesize summary

Paper: Network Function Virtualization in Dynamic Networks: A Stochastic Perspective (2018)

key themes: network function virtualisation (NFV), predicting and adapting to demand in a constantly changing system.

Problem: Network function virtualisation (NFV) involves carrying out network functions using software, rather than separate pieces of hardware – we require an efficient method of processing these virtual requests. Most methods proposed so far would take a lot of time and computational power to do this, and would not work well in the large, complicated, and constantly-changing systems required for 5G.

Proposed solution: Creating a program which anticipates and adapts to the system changes as they happen, and is designed to find an optimal solution in the long run (rather than just at each time) for allocating network resources to these virtual networks.

More details

In this work, the research team are trying to overcome a challenge which results from Network Function Virtualisation (see box, right). Carrying out system functions ‘virtually’ means you need less physical hardware, but you still need to interface with physical machines to transmit the data through the network.

The problem arises when you need to allocate resources in this system. The virtual machines can only do so much at once, and there is only a limited amount of computational power or bandwidth in the cables to send this information efficiently around the network. In addition, the users have wildly different demands, which are difficult to predict.

What is Network Function Virtualisation (NFV)?

It’s a way of running processes that would normally be carried out on physical hardware (e.g. routers or firewalls) by using software. This software runs on a “virtual machine”, hosted by simpler, physical machines. A simple analogy might be: instead of having a separate calculator, telephone, and computer, they can all be combined and run on a modern mobile phone, using the right software that can carry out these same functions with simpler hardware.

How can limited resources be shared out most effectively in this complex system? To work this out, researchers tend to make a computer model of the system, and try to create an algorithm which schedules the resources. They can test this algorithm assuming different demands, and see how effective it is. In this work, the team tries to allocate resources within one network slice.

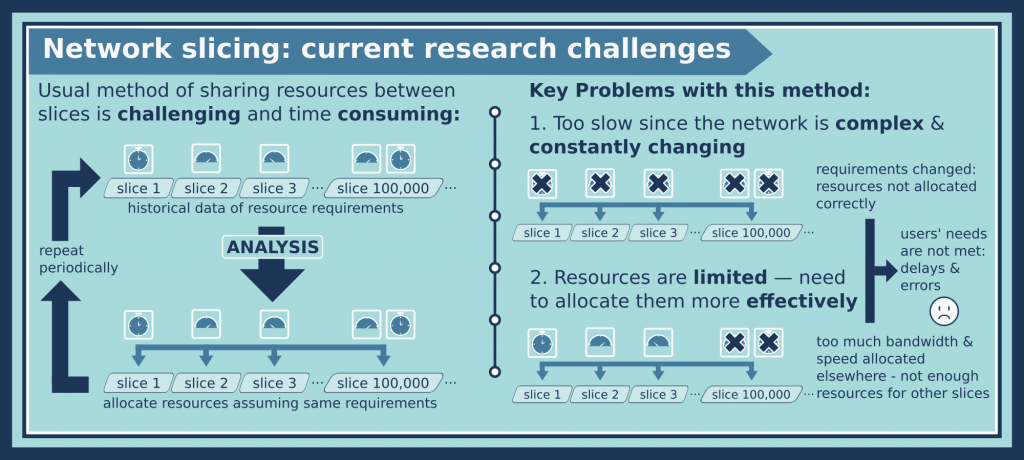

Many studies that try to share out resources in 5G networks have used a ‘deterministic approach’. This means that the researchers typically assume, usually based on what happened in the past, that the network capacity or the demands of the different users are predictable, fixed values. For example: the network has this much bandwidth to offer, and a number of different users require a certain amount of bandwidth at a certain time. They might also consider things like the latency or security – these are examples of ‘quality of service’ requirements. They use these assumptions in their computer model of the system and compute a solution, i.e. try to work out the best way to allocate the different resources. They may need to compute a new solution each time the network changes, which is one simple way to deal with a constantly changing system.

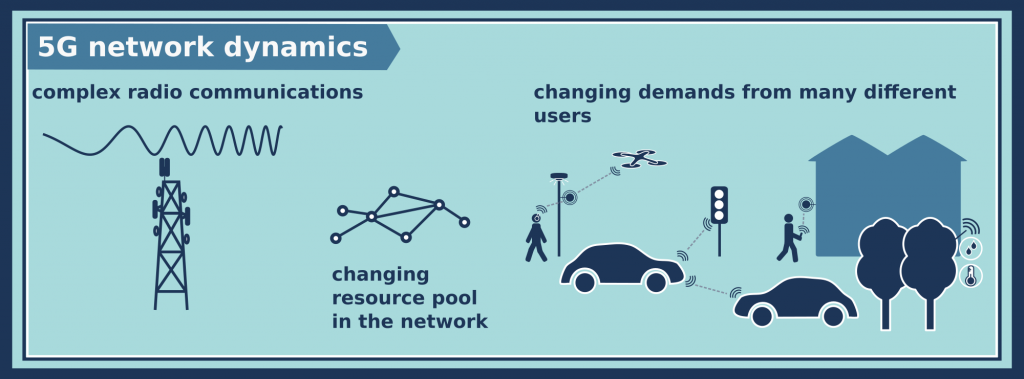

However, this approach won’t work very effectively in a real 5G system, because there are far too many network dynamics (i.e. changes) and uncertainties to account for. It would be too time and resource-consuming to compute a new solution each time, and it would likely also lead to instability in the system too. Many things in the network are dynamic: there are lots of complex and ever-changing radio communications, there are many users with different usage times and demands, and the network resources will likely be shared between many different applications, so the resource pool will be frequently changing. Overall, the resource and traffic conditions are always changing in time.

Ideally, there needs to be a method which anticipates and adapts to these system dynamics as they happen, rather than retrospectively. There has been work carried out by others which has looked into allocating resources in a constantly changing system, but this research paper suggests a more reliable method to do this.

A stochastic method is suggested in this paper (see box, right) which assumes that the demands and resources are randomly changing. The model uses a mathematical technique known as “combinatorial optimisation”, which is used for finding optimal paths through networks (for example in the travelling salesman problem).

The research team develop a program which takes into account past requirements and predicted future requirements, to better allocate resources. Importantly, the program is designed to “play the long game“, making decisions now which may seem sub-optimal, but will work out to give the best results overall in future.

What is a stochastic method?

If something is “stochastic”, it’s changing randomly, and we usually think of it changing randomly in time. A stochastic method uses mathematical techniques to model unpredictable and complex systems, like the movements of each molecule in a gas, or the fluctuations of the stock market – you can’t predict each event, but you can try to predict overall outcomes and trends. In this work, it is the complex network dynamics that are modelled as changing randomly.

The method involves solving a complicated program which is run at the start (which makes an overall strategy for allocating resources), and then only simple calculations need to be carried out as the model network system is running to adapt to the current demand. This is much more preferable than having to run a complicated model at each time step.

In the Wu Lab research group, this work led on to two further papers. In the 2019 paper, the team looked at allocating resources amongst various network slices (rather than looking at one slice as in this work), using machine learning techniques. In the 2020 paper, the team continue the work from this paper to make an algorithm which is even more reliable over a long period. Click on the links below to read more.